Top iOS 11 Features That Matter Most for App Developers

There are dozens of new features coming out this fall in iOS 11, and developers now have limited access to Apple’s first developer beta, unveiled last week at the company’s annual Worldwide Developers Conference (WWDC).

There’s nothing we can build into commercial apps just yet, but thousands of my fellow developers and I are now digging into the new features and updated toolsets to identify opportunities to build new and improved app-based experiences.

For the clients we at ArcTouch serve — ranging from startups to the Fortune 500 — we’ve put together a list of the top iOS 11 features we think will matter most when Apple releases iOS 11 in the fall.

Natural Language Processing

iOS 11 has been supercharged by Apple’s focus on machine learning, and specifically its work in natural language processing. With greater intelligence, the OS will recognize names of people and places, and will understand addresses and the digits that make up a phone number. Phrases can also be linked automatically to related content. For example, if you search for “hike” in an app, you’ll find related content even if it’s not an exact match (like “hiked” or “hikes”), something Google has historically done very well. It’s also possible to trigger changes to the UI based on text input: a phrase written in a different language could prompt an app to instantly switch its UI into that language, a feature I’m looking forward to exploring more. iOS 11 can even detect the sentiment of text input. Imagine an app that can detect your mood and respond with content that might improve it.

Together, these capabilities have the potential to significantly improve the user experience in apps.
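To make the name recognition and language detection concrete, here is a minimal sketch using NSLinguisticTagger, the Foundation class behind these features. The function names are my own, and the results depend on Apple’s language models, so treat this as a starting point rather than a finished implementation.

```swift
import Foundation

/// Returns the people, places, and organizations the tagger finds in `text`.
/// (Hypothetical helper name; results depend on the OS language models.)
func namedEntities(in text: String) -> [(name: String, kind: String)] {
    let tagger = NSLinguisticTagger(tagSchemes: [.nameType], options: 0)
    tagger.string = text

    let range = NSRange(location: 0, length: text.utf16.count)
    // .joinNames keeps multi-word names such as "San Francisco" together.
    let options: NSLinguisticTagger.Options = [.omitPunctuation, .omitWhitespace, .joinNames]
    let wanted: [NSLinguisticTag] = [.personalName, .placeName, .organizationName]

    var results: [(name: String, kind: String)] = []
    tagger.enumerateTags(in: range, unit: .word, scheme: .nameType, options: options) { tag, tokenRange, _ in
        if let tag = tag, wanted.contains(tag) {
            let name = (text as NSString).substring(with: tokenRange)
            results.append((name: name, kind: tag.rawValue))
        }
    }
    return results
}

/// Returns a language code for the dominant language of `text`, if one can be determined.
/// An app could compare this with its current locale before offering to switch its UI.
func dominantLanguageCode(of text: String) -> String? {
    return NSLinguisticTagger.dominantLanguage(for: text)
}
```

Phone numbers and addresses are handled separately by NSDataDetector, which has been available for several releases.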

SiriKit


When Apple first introduced SiriKit in iOS 10, it wasn’t quite as open to developers as I’d hoped. As I wrote last year, we were given some capabilities to build fairly basic voice interactions, but they were limited to seven types of applications. That’s about to change with a major update of SiriKit in iOS 11.

For the first time, apps can offer transactional features through voice control. For example, in a banking app, users can ask Siri to transfer money, show balances or pay bills. It’s possible to create and edit notes and lists, or update a grocery list in a shopping app. Or, if you want to display your Snapchat Snapcode, you could ask Siri to show it on the screen and then share it with your friends.
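As a rough idea of what supporting the new notes capability involves, here is a sketch of an intent handler for creating a note. The class name is hypothetical, the storage step is left as a comment, and a real app would also need an Intents extension and the Siri entitlement, which are not shown.

```swift
import Foundation
import Intents

/// Handles "create a note" requests that Siri routes to the app.
/// (Hypothetical class name; persistence is intentionally left out.)
final class CreateNoteHandler: NSObject, INCreateNoteIntentHandling {
    func handle(intent: INCreateNoteIntent,
                completion: @escaping (INCreateNoteIntentResponse) -> Void) {
        // Without a title there is nothing sensible to create.
        guard let title = intent.title else {
            completion(INCreateNoteIntentResponse(code: .failure, userActivity: nil))
            return
        }

        // The spoken body of the note is optional.
        let contents: [INNoteContent] = intent.content.map { [$0] } ?? []

        // A real app would save the note to its own store here.
        let note = INNote(title: title,
                          contents: contents,
                          groupName: intent.groupName,
                          createdDateComponents: nil,
                          modifiedDateComponents: nil,
                          identifier: UUID().uuidString)

        let response = INCreateNoteIntentResponse(code: .success, userActivity: nil)
        response.createdNote = note
        completion(response)
    }
}
```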

Voice is becoming an important interface because of the benefits it offers — it gives people hands-free access to apps when they need it (e.g. when driving) and in many cases it’s the fastest way to launch an app or get information. Supporting SiriKit should be a high priority for app developers as voice grows in importance.

Barcode recognition

QR codes have been a favorite tool of marketers in the past because they allowed them to take users to a website or app. But the fact that this practice required people to download a scanning app was a huge barrier. Now, the iOS 11 camera app supports automatic barcode recognition, which means anyone with an iPhone can, in one step, snap a picture of a code and take an immediate digital journey to the intended app or website. It’s a feature that the operating system will provide for free in iOS 11, and app marketers should use it to improve discoverability.
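The camera feature needs no code at all, but if you want to read codes inside your own app, the new Vision framework offers a barcode request. The sketch below assumes you already have a CGImage to scan; the function name is my own.

```swift
import CoreGraphics
import Vision

/// Returns the string payloads of any barcodes or QR codes Vision finds in `image`.
/// (Hypothetical helper; a camera-based scanner would feed video frames instead.)
func barcodePayloads(in image: CGImage) throws -> [String] {
    let request = VNDetectBarcodesRequest()
    let handler = VNImageRequestHandler(cgImage: image, options: [:])
    try handler.perform([request])

    let observations = request.results as? [VNBarcodeObservation] ?? []
    // Some barcodes carry binary data only, so the payload string can be nil.
    return observations.compactMap { $0.payloadStringValue }
}
```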

Face detection and tracking

As part of Apple’s new Vision framework, apps that capture images or video in real time, or that process images, will be able to detect faces and facial features. The best part is that it’s very simple for developers to take advantage of this feature in image-based apps. For example, apps could easily add a virtual accessory to someone’s face (e.g. a mustache or a hat) in real time. Since iOS 11 does the heavy lifting, it doesn’t require the same level of developer skill as custom-building the same capability in previous versions of iOS. The operating system also offers great tools to help place interface elements at a specific position within the image.
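Here is a minimal sketch of that flow: ask Vision for faces, then convert each result into view coordinates so an overlay (say, a hat) can be positioned. The function names are hypothetical, and the conversion assumes the image exactly fills the view, which a real app with aspect-fit or aspect-fill scaling would have to adjust for.

```swift
import CoreGraphics
import Vision

/// Returns the bounding box of each detected face in normalized coordinates
/// (0...1, with the origin in the lower-left corner of the image).
func detectFaceBoxes(in image: CGImage) throws -> [CGRect] {
    let request = VNDetectFaceLandmarksRequest()
    let handler = VNImageRequestHandler(cgImage: image, options: [:])
    try handler.perform([request])

    let faces = request.results as? [VNFaceObservation] ?? []
    return faces.map { $0.boundingBox }
}

/// Converts a normalized Vision rectangle into UIKit-style view coordinates,
/// where the origin is in the upper-left corner.
/// Assumption: the image is drawn edge to edge in a view of `viewSize`.
func viewRect(forNormalized box: CGRect, in viewSize: CGSize) -> CGRect {
    return CGRect(x: box.minX * viewSize.width,
                  y: (1 - box.maxY) * viewSize.height,
                  width: box.width * viewSize.width,
                  height: box.height * viewSize.height)
}
```

Each face observation also exposes a landmarks property with regions such as the eyes, nose and lips, which is what you would use to anchor a mustache more precisely.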

Object tracking/recognition

In iOS 11, it’s now possible to detect objects in real time. Through Vision, Apple has provided frameworks to detect many different types of objects in the real world. This means it will be possible, for example, to point your camera at a food item and have an app search for recipes that include that item. Or, if you’re traveling, you might point your camera at a historic landmark and have an app give you information on that landmark.

People tend to be curious about their surroundings and sometimes they rely on apps to provide answers to questions that they have. Building in support for object tracking can make an app really meaningful for a user.
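For the tracking half of this, the sketch below follows one object from frame to frame with Vision. The class name is my own, and it assumes the app already knows where the object starts (for example, from a rectangle the user drew). Recognizing what the object is, such as a food item or a landmark, would additionally need a trained Core ML model, which is not shown.

```swift
import CoreGraphics
import CoreVideo
import Vision

/// Follows a single object across successive video frames.
/// (Hypothetical wrapper; bounding boxes are in Vision's normalized coordinates.)
final class ObjectTracker {
    private let sequenceHandler = VNSequenceRequestHandler()
    private let request: VNTrackObjectRequest

    /// The most recent known position of the tracked object.
    private(set) var currentBoundingBox: CGRect

    init(initialBoundingBox: CGRect) {
        let seed = VNDetectedObjectObservation(boundingBox: initialBoundingBox)
        request = VNTrackObjectRequest(detectedObjectObservation: seed)
        request.trackingLevel = .accurate
        currentBoundingBox = initialBoundingBox
    }

    /// Feeds the next camera frame to the tracker and returns the updated position.
    func track(in frame: CVPixelBuffer) throws -> CGRect {
        try sequenceHandler.perform([request], on: frame)
        if let updated = request.results?.first as? VNDetectedObjectObservation {
            // The latest observation becomes the input for the next frame.
            request.inputObservation = updated
            currentBoundingBox = updated.boundingBox
        }
        return currentBoundingBox
    }
}
```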

Handwriting/text detection via Vision

Through its improvements in machine learning, iOS 11 can now find handwriting or printed words in an image, the first step toward converting them to text. So, for example, if you prefer to write with pen and paper, you could theoretically use the iPhone camera to take a picture of your notes and have them converted to text. There’s a lot of testing to be done here, but this opens up great potential use cases that take offline printed content and convert it into digital content.
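As a hedged sketch of the detection step, the function below asks Vision where the text is in an image. Note the limit: this request returns regions, not strings, so converting each region into actual characters would take a further recognition step (for example, a Core ML model) that is not shown. The function name is hypothetical.

```swift
import CoreGraphics
import Vision

/// Returns the normalized bounding boxes of text regions Vision finds in `image`.
/// (Hypothetical helper; it locates text but does not read it.)
func textRegions(in image: CGImage) throws -> [CGRect] {
    let request = VNDetectTextRectanglesRequest()
    // Also ask for per-character boxes, which a recognition step could consume.
    request.reportCharacterBoxes = true

    let handler = VNImageRequestHandler(cgImage: image, options: [:])
    try handler.perform([request])

    let observations = request.results as? [VNTextObservation] ?? []
    return observations.map { $0.boundingBox }
}
```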

Augmented reality through ARKit

With the launch of ARKit, Apple is giving augmented reality a big boost. ARKit offers a set of frameworks that can help you do things like insert virtual objects into the world around you through the device camera. The barrier to creating games like Pokémon Go will be lower, as Apple provides the toolkit to make augmented experiences much more interactive. One particularly interesting use case is placing virtual household objects on top of real-world surfaces; for example, you could place a high-fidelity rendering of a sofa in your living room. E-commerce apps, in particular, should start leveraging these tools as a way for users to virtually try items before they buy them.
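To give a feel for the sofa example, here is a bare-bones sketch that detects horizontal surfaces and drops a plain box wherever the user taps on one. The class name and box dimensions are made up, the box stands in for a real 3D model, and a shipping app would also need a camera usage description in its Info.plist.

```swift
import ARKit
import SceneKit
import UIKit

/// Places a simple box on a detected horizontal surface when the user taps.
/// (Hypothetical view controller; the box is a stand-in for a furniture model.)
final class FurniturePlacementViewController: UIViewController {
    private let sceneView = ARSCNView()
    private let boxHeight: CGFloat = 0.1  // meters

    override func viewDidLoad() {
        super.viewDidLoad()
        sceneView.frame = view.bounds
        sceneView.autoresizingMask = [.flexibleWidth, .flexibleHeight]
        view.addSubview(sceneView)

        let tap = UITapGestureRecognizer(target: self, action: #selector(handleTap(_:)))
        sceneView.addGestureRecognizer(tap)
    }

    override func viewWillAppear(_ animated: Bool) {
        super.viewWillAppear(animated)
        // World tracking with plane detection finds floors and tabletops.
        let configuration = ARWorldTrackingConfiguration()
        configuration.planeDetection = .horizontal
        sceneView.session.run(configuration)
    }

    override func viewWillDisappear(_ animated: Bool) {
        super.viewWillDisappear(animated)
        sceneView.session.pause()
    }

    @objc private func handleTap(_ recognizer: UITapGestureRecognizer) {
        let point = recognizer.location(in: sceneView)
        // Only accept taps that land on a plane ARKit has already detected.
        guard let hit = sceneView.hitTest(point, types: .existingPlaneUsingExtent).first else { return }

        let box = SCNBox(width: 0.2, height: boxHeight, length: 0.1, chamferRadius: 0)
        let node = SCNNode(geometry: box)
        let position = hit.worldTransform.columns.3
        // Raise the box by half its height so it rests on the surface.
        node.position = SCNVector3(position.x, position.y + Float(boxHeight / 2), position.z)
        sceneView.scene.rootNode.addChildNode(node)
    }
}
```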


Enhanced push notifications

In iOS 11, push notifications are becoming much more advanced, to the benefit of both users and app marketers. In iOS 10, notifications allowed apps to include media previews like photos and videos. But in iOS 11, apps let you engage with notifications while the device is still locked. For example, you can watch, like or comment on videos or photos without actually opening the app. You could even have a full chat within the notification. Also, you can choose to make some types of notifications private, provided the app supports this feature, so that others won’t be able to see the contents without unlocking the device. For us developers, this means rethinking how we use notifications and the types of experiences we want to offer outside of the app itself.
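Here is a sketch of how an app might declare a notification type with like and comment actions, plus placeholder text for when the user has chosen to hide previews. All identifiers and strings are invented for illustration.

```swift
import UserNotifications

/// Builds a category for photo notifications with "Like" and "Comment" actions.
/// (Identifiers and titles are hypothetical.)
func makePhotoCategory() -> UNNotificationCategory {
    let like = UNNotificationAction(identifier: "LIKE", title: "Like", options: [])
    let comment = UNTextInputNotificationAction(identifier: "COMMENT",
                                                title: "Comment",
                                                options: [],
                                                textInputButtonTitle: "Send",
                                                textInputPlaceholder: "Add a comment")

    // When previews are hidden, the body is replaced by the placeholder,
    // while .hiddenPreviewsShowTitle keeps the title visible.
    return UNNotificationCategory(identifier: "PHOTO_POST",
                                  actions: [like, comment],
                                  intentIdentifiers: [],
                                  hiddenPreviewsBodyPlaceholder: "New activity",
                                  options: [.hiddenPreviewsShowTitle])
}

/// Registers the category; call this once at launch from inside the app.
func registerNotificationCategories() {
    UNUserNotificationCenter.current().setNotificationCategories([makePhotoCategory()])
}
```

A notification opts in by setting its category identifier to "PHOTO_POST", and the app responds to the chosen action in its notification center delegate.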

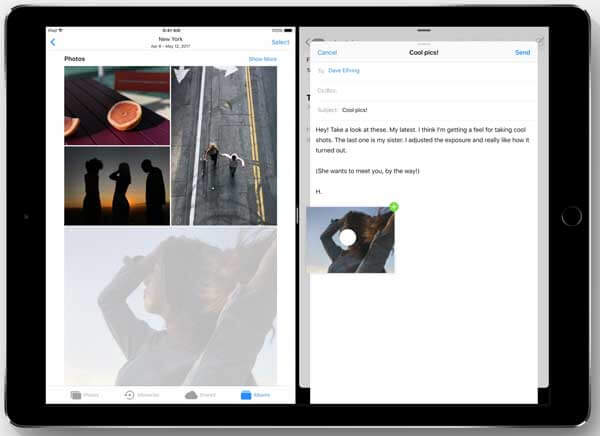

Drag and Drop

Apple’s new Drag and Drop for iPad, as the name suggests, allows users to move items across different apps in a similar way to what we experience on our computers. But applied to the touch screen, Apple created a couple of nifty sub-features. For one, users can grab a piece of content by pressing and holding on it, at which point it becomes pinned to the finger. Another wrinkle: you can grab multiple pieces of content using different fingers. Apple has yet to enable the feature on iPhone, though it reportedly exists but is not accessible via the user interface, so expect that Apple may eventually give developers access to it.

To take advantage of this feature on the iPad, your app needs to support it — both the selection of content within your app and the ability of your app to receive files that just land inside it.
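Both halves can be sketched in one small view controller: the image view below can be dragged out of the app and can also accept an image dropped onto it. The class name is hypothetical and the example handles images only.

```swift
import UIKit

/// An image view that acts as both a drag source and a drop target.
/// (Hypothetical view controller; only UIImage content is supported.)
final class PhotoViewController: UIViewController, UIDragInteractionDelegate, UIDropInteractionDelegate {
    private let imageView = UIImageView()

    override func viewDidLoad() {
        super.viewDidLoad()
        imageView.frame = view.bounds
        imageView.autoresizingMask = [.flexibleWidth, .flexibleHeight]
        imageView.contentMode = .scaleAspectFit
        // Image views ignore touches by default, so interactions need this.
        imageView.isUserInteractionEnabled = true
        view.addSubview(imageView)

        imageView.addInteraction(UIDragInteraction(delegate: self))
        imageView.addInteraction(UIDropInteraction(delegate: self))
    }

    // Drag source: hand the current image to the system when a drag begins.
    func dragInteraction(_ interaction: UIDragInteraction,
                         itemsForBeginning session: UIDragSession) -> [UIDragItem] {
        guard let image = imageView.image else { return [] }
        return [UIDragItem(itemProvider: NSItemProvider(object: image))]
    }

    // Drop target: accept only sessions that contain an image.
    func dropInteraction(_ interaction: UIDropInteraction, canHandle session: UIDropSession) -> Bool {
        return session.canLoadObjects(ofClass: UIImage.self)
    }

    func dropInteraction(_ interaction: UIDropInteraction,
                         sessionDidUpdate session: UIDropSession) -> UIDropProposal {
        return UIDropProposal(operation: .copy)
    }

    func dropInteraction(_ interaction: UIDropInteraction, performDrop session: UIDropSession) {
        session.loadObjects(ofClass: UIImage.self) { [weak self] items in
            // Show the first dropped image, if there is one.
            self?.imageView.image = items.first as? UIImage
        }
    }
}
```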

This is particularly interesting for productivity apps, especially suites where related content can be shared between apps, like Google Docs or Office 365. But for any app that uses photos from an iPad’s camera, or where users create content to share via email or social media apps, this is a feature developers should support.


All new Swift compiler in Xcode 9

Every year alongside the release of its new OS, Apple also releases a new version of its integrated development environment (IDE), Xcode. Normally, this doesn’t have a direct impact on businesses creating the apps or the user experience, just the developers. But this year, Xcode 9, among other great new editing tools, includes a brand-new compiler written specifically for Swift, Apple’s now 3-year-old programming language. This new Swift compiler promises to improve build times and file indexing, two areas that are constant sources of complaints within the developer community. Improvements like these are important to keep Swift moving forward and increase adoption. And, if it truly does improve bottom-line efficiency, that can save companies development time and cost.

Is your app ready for iOS 11?

There are lots of new features to take advantage of — and there is also a chance, as with any release, older features may break. If you have a current iOS app and are thinking about updating it for iOS 11, we’re happy to take a look at your app and offer up some free advice. Let’s set up a time to talk.